Contributing a Specific Amount of Storage from a Slave Node to the Hadoop Cluster

😀 Hello Readers,

In this blog, I’ll show how a slave node can contribute a specific amount of its storage to the Hadoop Cluster. The hint is “Linux Partitions”.

🤔 Can we solve this using Linux Partitions?

Yes, we can do this by using the concept of LVM (Logical Volume Manager).

❓ What is LVM (Logical Volume Manager)?

LVM, or Logical Volume Management, is a storage device management technology that gives users the power to pool and abstract the physical layout of component storage devices for easier and more flexible administration. Utilizing the device mapper Linux kernel framework, it can be used to gather existing storage devices into groups and allocate logical units from the combined space as needed.

The main advantages of LVM are increased abstraction, flexibility, and control. Logical volumes can have meaningful names like “databases” or “root-backup”. Volumes can be resized dynamically as space requirements change and migrated between physical devices within the pool on a running system or exported easily.

LVM Architecture and Terminology:

LVM Storage Management Structures:

LVM functions by layering abstractions on top of physical storage devices. The basic layers that LVM uses, starting with the most primitive, are:

Physical Volumes:

Physical block devices or other disk-like devices (for example, other devices created by device mapper, like RAID arrays) are used by LVM as the raw building material for higher levels of abstraction. Physical volumes are regular storage devices. LVM writes a header to the device to allocate it for management.

Volume Groups:

LVM combines physical volumes into storage pools known as volume groups. Volume groups abstract the characteristics of the underlying devices and function as a unified logical device with combined storage capacity of the component physical volumes.

Logical Volumes:

A volume group can be sliced up into any number of logical volumes. Logical volumes are functionally equivalent to partitions on a physical disk, but with much more flexibility. Logical volumes are the primary component that users and applications will interact with.

Let’s do the practical.

📌 For this practical, I’ve already set up a Hadoop Cluster with one master node and one slave node on AWS Cloud.

📌 Run the command below to see how much storage the slave node is contributing to the Hadoop Cluster:

$ hadoop dfsadmin -report
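To compare the numbers before and after the change, it can help to pull the cluster-wide “Configured Capacity” out of the report. A minimal sketch, assuming the report prints lines of the form `Configured Capacity: <bytes> (<human-readable>)`; the function names are hypothetical. On Hadoop 2 and later, `hdfs dfsadmin -report` is the non-deprecated spelling of the same command.

```shell
# Hypothetical helpers for reading the cluster capacity out of "dfsadmin -report".

# Read report text on stdin and print the first "Configured Capacity" value in bytes.
# The first occurrence is the cluster-wide summary; later ones are per DataNode.
configured_capacity_bytes() {
  awk -F': ' '/^ *Configured Capacity/ { split($2, parts, " "); print parts[1]; exit }'
}

# Convert a byte count to GiB (1 GiB = 1024^3 bytes), printed with two decimals.
bytes_to_gib() {
  awk -v bytes="$1" 'BEGIN { printf "%.2f\n", bytes / (1024 * 1024 * 1024) }'
}
```

For example, `bytes_to_gib "$(hadoop dfsadmin -report | configured_capacity_bytes)"` prints the current capacity in GiB, which you can run again at the end of the practical.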

📌 Currently, my slave node is contributing around 10 GiB. For this practical, I’m going to attach a new 20 GiB EBS volume to it.

📌 In AWS, go to EC2 Service >> Volumes >> Create Volume, and give the volume a Name tag so that it is easy to identify. Create it in the same Availability Zone as the slave instance, otherwise it cannot be attached to it.

📌 Now attach the volume to the slave node using Actions >> “Attach Volume”.
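If you prefer the command line over the console, the same two steps can be scripted with the AWS CLI. A minimal sketch, assuming the CLI is installed and configured with EC2 permissions; the function name, the default Name tag `hadoop-slave-storage` and the `gp2` volume type are my own choices, not part of the steps above.

```shell
# Hypothetical helper: create a tagged EBS volume and attach it to an instance.
# Assumes the AWS CLI is installed and configured with EC2 volume permissions.
create_and_attach_volume() {
  local az="$1"           # must be the Availability Zone of the slave instance
  local instance_id="$2"  # the slave node's instance ID
  local size_gib="${3:-20}"
  local name_tag="${4:-hadoop-slave-storage}"
  local volume_id

  # Create the volume and capture only its ID.
  volume_id=$(aws ec2 create-volume \
    --availability-zone "$az" \
    --size "$size_gib" \
    --volume-type gp2 \
    --tag-specifications "ResourceType=volume,Tags=[{Key=Name,Value=${name_tag}}]" \
    --query 'VolumeId' --output text) || return 1

  # Block until the new volume is ready to be attached.
  aws ec2 wait volume-available --volume-ids "$volume_id" || return 1

  # /dev/sdf typically shows up as /dev/xvdf inside Xen-based instances.
  aws ec2 attach-volume \
    --volume-id "$volume_id" \
    --instance-id "$instance_id" \
    --device /dev/sdf > /dev/null || return 1

  echo "$volume_id"
}
```

Usage would look like `create_and_attach_volume us-east-1a i-0123456789abcdef0 20`, with your own zone and instance ID in place of these placeholders. On Nitro-based instance types the disk appears as an NVMe device (for example /dev/nvme1n1) instead of /dev/xvdf.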

📌 Now go to the slave node’s console and run the command below to check whether the new volume is attached:

# To display all hard disks in your system
$ fdisk -l

📌 You will see a new disk called /dev/xvdf with 20 GiB of storage in the output (shown in the image above).
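A freshly attached EBS volume can take a few seconds to appear inside the instance. Here is a small sketch (the `wait_for_disk` helper is hypothetical) that polls for the device and then prints it with `lsblk`, a more compact alternative to `fdisk -l`:

```shell
# Hypothetical helper: wait until a block device exists, then show it with lsblk.
wait_for_disk() {
  local dev="$1"
  local timeout="${2:-30}"  # seconds to wait before giving up
  local waited=0

  # -b is true only for block special files such as /dev/xvdf.
  until [ -b "$dev" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Timed out after ${timeout}s waiting for $dev" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done

  lsblk "$dev"
}
```

For this practical that would be `wait_for_disk /dev/xvdf 60`.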

📌 Before creating a physical volume, you have to install the lvm2 package, which provides the tools for managing Logical Volumes:

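$ yum install lvm2-8:2.03.02-6.el8.x86_64

(A plain “yum install lvm2” also works and installs whichever lvm2 version your repositories provide.)

📌 Now create a physical volume on that disk using the command below: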

# To create a new Physical Volume
$ pvcreate /dev/xvdf

📌 Now verify that the physical volume was created using the command below:

# To display all Physical Volumes
$ pvdisplay

or

$ pvdisplay /dev/xvdf
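Because pvcreate overwrites the start of the disk, it is worth guarding against picking the wrong device. A minimal sketch, assuming it runs as root; `create_pv` is a hypothetical name, and the safety check relies on `blkid` finding no existing filesystem, LVM or partition-table signature on the disk:

```shell
# Hypothetical helper: turn a disk into an LVM physical volume, refusing to touch
# anything that does not look like a fresh, empty disk. Run as root.
create_pv() {
  local dev="$1"

  if ! command -v pvcreate >/dev/null 2>&1; then
    echo "pvcreate not found; install the lvm2 package first" >&2
    return 1
  fi
  if [ ! -b "$dev" ]; then
    echo "$dev is not a block device" >&2
    return 1
  fi
  # blkid exits 0 when it finds a filesystem, LVM or partition-table signature.
  if blkid "$dev" >/dev/null 2>&1; then
    echo "$dev already has a signature; not touching it" >&2
    return 1
  fi

  pvcreate "$dev" && pvs "$dev"
}
```

Here that would be `create_pv /dev/xvdf`.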

📌 Now create a Volume Group with a unique name for that Physical Volume:

# Create a Volume Group from one or more Physical Volumes
# Note: a volume group can contain more than one physical volume
$ vgcreate cluster-vg /dev/xvdf

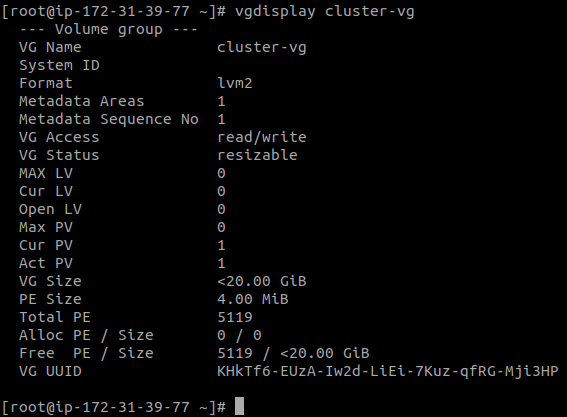

📌 Cross-check with the command below:

# To display Volume Group info
$ vgdisplay cluster-vg
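Since a volume group can span several physical volumes, another EBS volume attached later can be added to the existing group without touching mylv. A sketch, assuming a hypothetical second disk `/dev/xvdg`; the helper name is also hypothetical:

```shell
# Hypothetical helper: grow a volume group by adding another disk to it. Run as root.
add_disk_to_vg() {
  local vg="$1"   # e.g. cluster-vg
  local dev="$2"  # e.g. /dev/xvdg (hypothetical second disk)

  # pvs succeeds only if the device is already an LVM physical volume.
  if ! pvs "$dev" >/dev/null 2>&1; then
    pvcreate "$dev" || return 1
  fi

  vgextend "$vg" "$dev" || return 1
  # Show PV count, total size and free space (lowercase g = GiB, powers of 1024).
  vgs --units g -o vg_name,pv_count,vg_size,vg_free "$vg"
}
```

If both disks are available from the start, they can also be passed together when creating the group, as in `vgcreate cluster-vg /dev/xvdf /dev/xvdg`.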

📌 Now create a Logical Volume from the volume group created above:

# Create a new Logical Volume named mylv with a size of 10 GiB
$ lvcreate --size 10G --name mylv cluster-vg

📌 Cross-check with the command below:

# Display the Logical Volume
$ lvdisplay cluster-vg/mylv

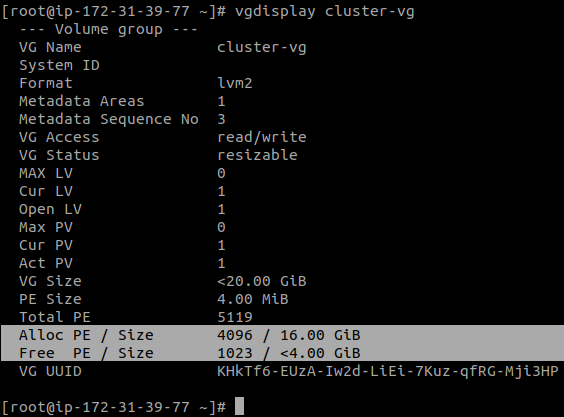

📌 Also check the volume group again (vgdisplay cluster-vg): it shows how much storage has been allocated to the logical volume and how much is still free.

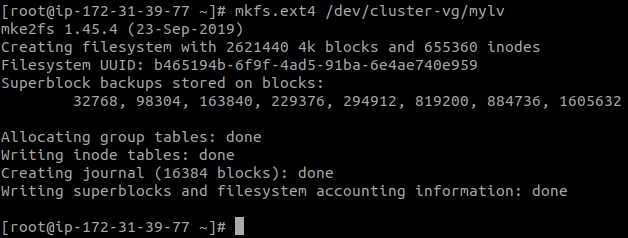
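`vgdisplay` and `lvdisplay` are verbose; `vgs` and `lvs` report the same information one line per object. A small sketch (the `lvm_summary` helper is hypothetical, defaulting to the `cluster-vg` group from this practical):

```shell
# Hypothetical helper: compact summary of a volume group and its logical volumes.
lvm_summary() {
  local vg="${1:-cluster-vg}"

  # Lowercase "g" in --units means GiB (multiples of 1024).
  vgs --units g -o vg_name,vg_size,vg_free "$vg"
  lvs --units g -o lv_name,lv_size,lv_path "$vg"
}
```

For this setup it should show roughly 10 GiB allocated to mylv and roughly 10 GiB still free in cluster-vg; newer LVM versions may prefix rounded sizes with a “<”.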

📌 We have now created a new partition/Logical Volume of 10 GiB. Before it can be used, it has to be formatted and then mounted on a folder. Since resize2fs is used later, it needs an ext-family filesystem, for example ext4:

$ mkfs.ext4 /dev/cluster-vg/mylv

📌 Now mount the formatted Logical Volume on the folder that the slave node already uses for HDFS storage (the DataNode directory). To find that folder, check hdfs-site.xml:

$ cat /etc/hadoop/hdfs-site.xml
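Instead of reading the whole file, the DataNode directory can be pulled out of hdfs-site.xml directly. The property is called `dfs.data.dir` in Hadoop 1.x and `dfs.datanode.data.dir` in Hadoop 2 and later. A rough sketch with standard text tools (the `datanode_dir` helper is hypothetical), assuming each `<value>` directly follows its `<name>` as in a typical hand-written config; a real XML parser would be more robust:

```shell
# Hypothetical helper: print the DataNode storage directory from hdfs-site.xml.
# Matches dfs.data.dir (Hadoop 1.x) and dfs.datanode.data.dir (Hadoop 2+).
datanode_dir() {
  local conf="${1:-/etc/hadoop/hdfs-site.xml}"

  # Join the file into one line so <name> and <value> can be matched together,
  # keep only the <value> after the matching <name>, then trim surrounding spaces.
  tr -d '\n' < "$conf" \
    | sed -n -E 's#.*<name>[[:space:]]*dfs(\.datanode)?\.data\.dir[[:space:]]*</name>[[:space:]]*<value>([^<]*)</value>.*#\2#p' \
    | sed -E 's/^[[:space:]]+//; s/[[:space:]]+$//'
}
```

With the configuration used here, `datanode_dir` should print /dn1.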

👉 In my case, the folder is /dn1, so mount the mylv Logical Volume on /dn1:

$ mount /dev/cluster-vg/mylv /dn1

👉 The new volume is now mounted on /dn1, so the slave node contributes 10 GiB of storage to the Hadoop cluster.
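A mount made with the mount command lasts only until the next reboot. A sketch that formats the volume only if it has no filesystem yet, mounts it, and adds an /etc/fstab entry so the mount comes back after a reboot. The helper names, the ext4 default and the `defaults,nofail` options are assumptions of this sketch; run it as root.

```shell
# Hypothetical helpers: format (only if empty), mount, and persist a volume mount.

# Print an /etc/fstab line; "nofail" lets boot continue if the volume is missing.
fstab_line() {
  local dev="$1" mnt="$2" fstype="${3:-ext4}"
  printf '%s %s %s defaults,nofail 0 2\n' "$dev" "$mnt" "$fstype"
}

mount_lv_persistently() {
  local dev="$1"   # e.g. /dev/cluster-vg/mylv
  local mnt="$2"   # e.g. /dn1, the DataNode directory
  local fstype="${3:-ext4}"

  # Create a filesystem only if blkid finds none on the device.
  if ! blkid "$dev" >/dev/null 2>&1; then
    mkfs -t "$fstype" "$dev" || return 1
  fi

  mkdir -p "$mnt"
  mount "$dev" "$mnt" || return 1

  # Append the fstab entry only once, so the mount survives a reboot.
  if ! grep -qs "^$dev[[:space:]]" /etc/fstab; then
    fstab_line "$dev" "$mnt" "$fstype" >> /etc/fstab
  fi
}
```

One caution: mounting over a folder that already holds files hides them until the volume is unmounted. If /dn1 already contains DataNode data, it is simplest to mount the volume before the DataNode first uses that folder.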

📌 The interesting part is that we can resize this storage on the fly, without stopping the cluster.

Let’s grow the logical volume to 16 GiB (10 GiB + 6 GiB) by running the command below:

$ lvextend --size +6G /dev/cluster-vg/mylv
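lvextend fails if the volume group does not have enough free space. In this practical cluster-vg has 20 GiB with 10 GiB given to mylv, so about 10 GiB is free and +6 GiB fits (10 + 6 = 16 GiB). A sketch that checks this before extending; all helper names are hypothetical.

```shell
# Hypothetical helpers: check that a volume group has room before growing an LV.

# Succeed if the requested amount (GiB) fits into the free space (GiB).
fits_in_free_space() {
  local free_gib="$1" request_gib="$2"
  awk -v free="$free_gib" -v req="$request_gib" 'BEGIN { exit !(req + 0 <= free + 0) }'
}

# Print a volume group's free space in GiB as a bare number, stripping padding
# and the "<" rounding marker that newer LVM versions may print.
vg_free_gib() {
  vgs --noheadings --nosuffix --units g -o vg_free "$1" | tr -d ' <'
}

# Extend /dev/<vg>/<lv> by N GiB only if the volume group has enough free space.
extend_if_room() {
  local vg="$1" lv="$2" add_gib="$3" free
  free=$(vg_free_gib "$vg")
  if [ -z "$free" ]; then
    echo "Could not read free space of $vg" >&2
    return 1
  fi
  if ! fits_in_free_space "$free" "$add_gib"; then
    echo "Only ${free} GiB free in $vg; cannot add ${add_gib} GiB" >&2
    return 1
  fi
  lvextend --size "+${add_gib}G" "/dev/${vg}/${lv}"
}
```

For this practical the call would be `extend_if_room cluster-vg mylv 6`.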

👉 Cross-check (for example with lvdisplay cluster-vg/mylv and vgdisplay cluster-vg) how much the mylv logical volume has been extended.

👉 Now run

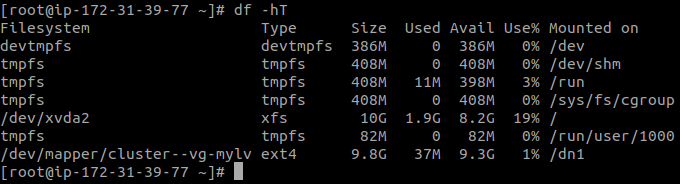

$ df -hT

🤔 Why isn’t the extended size showing up?

✅ Because only the logical volume grew from 10 GiB to 16 GiB. The filesystem on it was created when the volume was 10 GiB and still covers only that space, so the extra 6 GiB cannot be used yet. There is no need to reformat (that would erase the existing data); the existing filesystem just has to be grown to fill the volume.

👉 Now grow the filesystem on mylv so it uses the extra 6 GiB. For ext3/ext4, resize2fs can do this while the volume stays mounted:

$ resize2fs /dev/cluster-vg/mylv
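resize2fs only handles the ext family. If the volume had been formatted as XFS (the default filesystem on RHEL 7 and 8), the equivalent tool is xfs_growfs, which takes the mount point instead of the device. A sketch (hypothetical helpers) that picks the tool from the filesystem type reported by blkid:

```shell
# Hypothetical helpers: grow a mounted filesystem after its logical volume was extended.

# Print the tool that grows a given filesystem type while it is mounted.
resize_tool_for() {
  case "$1" in
    ext3|ext4) echo "resize2fs" ;;
    xfs)       echo "xfs_growfs" ;;
    *)         return 1 ;;
  esac
}

grow_fs() {
  local dev="$1"   # e.g. /dev/cluster-vg/mylv
  local mnt="$2"   # e.g. /dn1
  local fstype tool

  fstype=$(blkid -o value -s TYPE "$dev") || return 1
  tool=$(resize_tool_for "$fstype") || { echo "Unsupported filesystem: $fstype" >&2; return 1; }

  if [ "$tool" = "resize2fs" ]; then
    resize2fs "$dev"    # ext3/ext4: takes the device
  else
    xfs_growfs "$mnt"   # XFS: takes the mount point
  fi
  df -hT "$mnt"
}
```

LVM can also do both steps in one command: `lvextend --resizefs --size +6G /dev/cluster-vg/mylv` extends the logical volume and then grows the filesystem on it.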

👉 Now run again:

$ df -hT

📌 You will see a total size of about 16 GiB for the /dev/mapper/cluster-vg-mylv filesystem.

👉 Also check the Hadoop Cluster storage again:

$ hadoop dfsadmin -report

📌 You will see that around 16 GiB of storage is now contributed to the Hadoop Cluster. This is how we can contribute a specific amount of storage to the cluster and change it on the fly, without stopping the cluster.

🙌 !!! That’s all Folks !!! 🙌

🤝 Thanks for reading!

…. Signing Off ….