Create a web server on AWS EC2 with persistent EFS storage (updated version of Task 1)

In Task 1 I created a web server on an EC2 instance, with the code written in Terraform for automation. In that task I stored the data on an EBS volume. By default, the root EBS volume of an instance is deleted when the instance is terminated, and an EBS volume lives in a single Availability Zone, so it is a poor fit for data that has to outlive the instance or be shared between instances. In this task we use AWS EFS for persistent storage instead: a managed NFS file system that exists independently of any instance and can be mounted by several instances at once.

Here is the link to the Task 1 blog post, in which I used an EBS (Elastic Block Store) volume to store the data …

Steps to create a web server on an EC2 instance with persistent storage on EFS (Elastic File System):

- Create and launch the application using Terraform

→ Create a security group that allows port 80 (HTTP) and the NFS port (2049).

→ Launch an EC2 instance.

→ For this EC2 instance, use an existing or newly provided key pair and the security group created in the first step.

→ Create a file system with the EFS service, attach it to your VPC, then mount it on /var/www/html.

→ The developer has uploaded the code to a GitHub repo; the repo also contains some images.

→ Copy the GitHub repo code into /var/www/html.

→ Create an S3 bucket, copy the images from the GitHub repo into it, and make them publicly readable.

→ Create a CloudFront distribution backed by the S3 bucket (which contains the images), and use the CloudFront URL to update the code in /var/www/html.

Now let's create the web server step by step.

- Step 1: Configure the AWS CLI with the command: aws configure

- Step 2: Provide the AWS credentials profile in the Terraform code, and set the region in which the web server will be launched. The profile named here must already exist in your AWS CLI configuration.

    provider "aws" {
      region  = "ap-south-1"
      profile = "task171"
    }

- Step 3: Create the key pair with Terraform code. In Task 1 I used a key pair that already existed.

This time I create the key pair in Terraform itself. The code registers a key pair named "terrakey" with AWS and saves the private key locally as "terrakey.pem".

Here is the code ..

    # Generate a 4096-bit RSA key inside Terraform
    resource "tls_private_key" "my_instancekey" {
      algorithm = "RSA"
      rsa_bits  = 4096
    }

    # Save the private key locally, readable only by its owner
    resource "local_file" "key_gen" {
      content         = tls_private_key.my_instancekey.private_key_pem
      filename        = "terrakey.pem"
      file_permission = "0400"
    }

    # Register the public key with AWS as the key pair "terrakey"
    resource "aws_key_pair" "instance_key" {
      key_name   = "terrakey"
      public_key = tls_private_key.my_instancekey.public_key_openssh
    }
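The key-pair code above relies on the tls and local providers in addition to aws, and later steps use null_resource from the null provider. The post does not show a block declaring them, so the following is only a minimal sketch, assuming Terraform 0.13 or newer (where the `source` syntax is available). No version constraints are given because the versions used originally are not known.

```hcl
# Sketch (not part of the original code): declare every provider the
# configuration uses so that "terraform init" installs all of them.
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
    tls = {
      source = "hashicorp/tls"
    }
    local = {
      source = "hashicorp/local"
    }
    null = {
      source = "hashicorp/null"
    }
  }
}
```

Terraform can usually infer these providers from the resource types, so this block mainly documents the dependencies and gives a place to pin versions later.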

- Step 4: Create a security group that allows HTTP (port 80), NFS (port 2049) and SSH (port 22).

Here is the code …

    resource "aws_security_group" "sg" {
      name        = "nfs-task2"
      description = "Allow HTTP SSH NFS"
      vpc_id      = "vpc-f2c6d99a"

      # HTTP from anywhere, for the web server
      ingress {
        description = "HTTP"
        from_port   = 80
        to_port     = 80
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # NFS (port 2049), used to reach the EFS mount target
      ingress {
        description = "NFS"
        from_port   = 2049
        to_port     = 2049
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # SSH, needed by the remote-exec provisioner
      ingress {
        description = "SSH"
        from_port   = 22
        to_port     = 22
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # Allow all outbound traffic
      egress {
        from_port   = 0
        to_port     = 0
        protocol    = "-1"
        cidr_blocks = ["0.0.0.0/0"]
      }

      tags = {
        Name = "mysecurity_http"
      }
    }
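The group above opens the NFS port to the whole internet, which is more than EFS needs: only the instance has to reach the mount target. One possible tightening, shown as a sketch, is to allow NFS only from members of the same group by using `self = true`. The resource name `sg_restricted` and the group name are hypothetical. This variant would replace the group above, and it assumes the instance and the mount target both keep using the same security group, as they do in this post.

```hcl
# Hypothetical variant of the security group: HTTP and SSH stay public,
# NFS is reachable only from resources that carry this same group.
resource "aws_security_group" "sg_restricted" {
  name        = "nfs-task2-restricted" # hypothetical name
  description = "HTTP and SSH from anywhere, NFS only within the group"
  vpc_id      = "vpc-f2c6d99a"

  ingress {
    description = "HTTP"
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    description = "SSH"
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # self = true: the source is this security group itself
  ingress {
    description = "NFS from members of this group"
    from_port   = 2049
    to_port     = 2049
    protocol    = "tcp"
    self        = true
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```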

- Step 5: Create the EFS file system, then create a mount target for it in one subnet of your VPC, using the security group created above.

Here is the code …

    resource "aws_efs_file_system" "efs" {
      creation_token = "myefs"

      tags = {
        Name = "EFS_storage"
      }
    }

    # The mount target makes the file system reachable from the subnet.
    # Referencing the file system ID already orders it after the file system.
    resource "aws_efs_mount_target" "mountEFS" {
      file_system_id  = aws_efs_file_system.efs.id
      subnet_id       = "subnet-1f3c4953"
      security_groups = [aws_security_group.sg.id]
    }
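The code above creates a single mount target, which is enough for one instance in one subnet. If instances in other subnets had to mount the same file system, one mount target per subnet would be needed (EFS allows one per Availability Zone). A possible sketch uses `for_each`; the variable `subnet_ids` and the resource name `per_subnet` are hypothetical, and this block would replace `mountEFS` rather than sit next to it.

```hcl
# Hypothetical variable: the subnets that need access to the file system.
# Only the first ID comes from the post; add one subnet per Availability Zone.
variable "subnet_ids" {
  type    = set(string)
  default = ["subnet-1f3c4953"]
}

# One mount target per subnet, all guarded by the same security group
resource "aws_efs_mount_target" "per_subnet" {
  for_each        = var.subnet_ids
  file_system_id  = aws_efs_file_system.efs.id
  subnet_id       = each.value
  security_groups = [aws_security_group.sg.id]
}
```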

- Step 6: Now launch the EC2 instance.

    resource "aws_instance" "os" {
      ami           = "ami-0447a12f28fddb066"
      instance_type = "t2.micro"
      # Reference the key pair resource so it is created before the instance
      key_name      = aws_key_pair.instance_key.key_name
      subnet_id     = "subnet-1f3c4953"

      vpc_security_group_ids = [aws_security_group.sg.id]

      # Wait for the EFS mount target so the file system can be mounted later
      depends_on = [aws_efs_mount_target.mountEFS]

      tags = {
        Name = "task2-OS"
      }
    }

- Step 7: Upload the website files to a GitHub repository.
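Going back to the instance in Step 6: the AMI ID there is hard-coded and only valid in ap-south-1. As a sketch of an alternative, a data source can look up a current image at plan time. This assumes the original AMI was an Amazon Linux 2 image (the later steps use ec2-user, yum and amazon-efs-utils, which is consistent with that, but the post does not say so). The data source name `amazon_linux_2` is hypothetical.

```hcl
# Assumption: the instance runs Amazon Linux 2. Look up the newest
# matching image published by Amazon in the configured region.
data "aws_ami" "amazon_linux_2" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["amzn2-ami-hvm-*-x86_64-gp2"]
  }
}
```

The instance would then use `ami = data.aws_ami.amazon_linux_2.id` instead of the literal ID.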

- Step 8: Now mount the EFS file system on the EC2 instance created earlier. To mount EFS, some packages must first be installed on the instance.

Installing packages means logging in to the instance. Terraform has a provisioner called "remote-exec" that connects to the instance over SSH and runs commands there. We use it to install the required packages, mount EFS on /var/www/html and clone the site code into it.

Here is the code …

    resource "null_resource" "efs_attach" {
      # Run only after the instance (and therefore the EFS mount target) exists
      depends_on = [aws_instance.os]

      connection {
        type        = "ssh"
        user        = "ec2-user"
        private_key = tls_private_key.my_instancekey.private_key_pem
        host        = aws_instance.os.public_ip
      }

      # The fstab entry is appended through "sudo tee", so /etc/fstab keeps
      # its root-only permissions instead of being made world-writable.
      provisioner "remote-exec" {
        inline = [
          "sudo yum install -y httpd git php amazon-efs-utils nfs-utils",
          "sudo systemctl start httpd",
          "sudo systemctl enable httpd",
          "echo '${aws_efs_file_system.efs.id}:/ /var/www/html efs tls,_netdev 0 0' | sudo tee -a /etc/fstab",
          "sudo mount -a -t efs,nfs4 defaults",
          "sudo rm -rf /var/www/html/*",
          "sudo git clone https://github.com/KumarNithish12/Cloud-task2.git /var/www/html/"
        ]
      }
    }

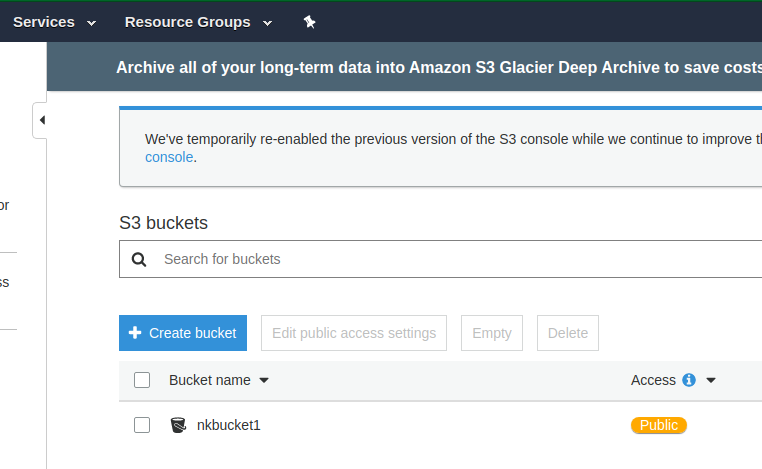

- Step 8: Create an S3 bucket and upload your images to it. In my case I uploaded only one image. To do this, we first clone the GitHub repository onto the local system and then upload the image from that clone to the bucket. To run commands on the local system (the machine that runs Terraform), Terraform provides a provisioner called "local-exec".

Here is the code …

    # Clone the repository on the machine that runs Terraform
    resource "null_resource" "cloning" {
      depends_on = [aws_s3_bucket.s3_bucket]

      provisioner "local-exec" {
        command = "git clone https://github.com/KumarNithish12/Cloud-task2.git"
      }
    }

    # Upload the image from the local clone and make it publicly readable
    resource "aws_s3_bucket_object" "myimage" {
      depends_on = [null_resource.cloning]

      bucket = aws_s3_bucket.s3_bucket.bucket
      key    = "image"
      source = "/home/nithish/terraform_code/task2/Cloud-task2/modi.png"
      acl    = "public-read"
    }
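The code above refers to `aws_s3_bucket.s3_bucket`, but the post does not show that resource. A minimal sketch of what it might look like follows. The bucket name and tag are hypothetical (bucket names must be globally unique), and `force_destroy` is an assumption added so that `terraform destroy` can remove a bucket that still contains the image.

```hcl
# Sketch of the bucket the other resources refer to; not from the original post.
resource "aws_s3_bucket" "s3_bucket" {
  bucket        = "task2-images-example" # hypothetical name, must be globally unique
  force_destroy = true                   # assumption: allow destroying a non-empty bucket

  tags = {
    Name = "task2-bucket" # hypothetical tag
  }
}
```

Depending on the account's S3 public access settings, the `public-read` ACL on the uploaded object may be rejected until public ACLs are allowed for this bucket.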

- Step 9: Create a CloudFront distribution. CloudFront is the content delivery network (CDN) offered by Amazon Web Services. A CDN is a globally distributed network of proxy servers that cache content, such as web videos or other bulky media, closer to consumers, which improves download speed.

Here is the code …

    resource "aws_cloudfront_distribution" "cloud_front" {
      # The S3 bucket that holds the images is the origin
      origin {
        domain_name = aws_s3_bucket.s3_bucket.bucket_domain_name
        origin_id   = "S3-${aws_s3_bucket.s3_bucket.bucket}"
      }

      enabled         = true
      is_ipv6_enabled = true
      comment         = "cf using s3"

      default_cache_behavior {
        allowed_methods  = ["DELETE", "GET", "HEAD", "OPTIONS", "PATCH", "POST", "PUT"]
        cached_methods   = ["GET", "HEAD"]
        target_origin_id = "S3-${aws_s3_bucket.s3_bucket.bucket}"

        forwarded_values {
          query_string = false

          cookies {
            forward = "none"
          }
        }

        viewer_protocol_policy = "allow-all"
        min_ttl                = 0
        default_ttl            = 3600
        max_ttl                = 86400
      }

      # Requests under /content/* are compressed and redirected to HTTPS
      ordered_cache_behavior {
        path_pattern     = "/content/*"
        allowed_methods  = ["GET", "HEAD", "OPTIONS"]
        cached_methods   = ["GET", "HEAD"]
        target_origin_id = "S3-${aws_s3_bucket.s3_bucket.bucket}"

        forwarded_values {
          query_string = false

          cookies {
            forward = "none"
          }
        }

        min_ttl                = 0
        default_ttl            = 3600
        max_ttl                = 86400
        compress               = true
        viewer_protocol_policy = "redirect-to-https"
      }

      restrictions {
        geo_restriction {
          restriction_type = "none"
        }
      }

      tags = {
        Environment = "cloud_production"
      }

      viewer_certificate {
        cloudfront_default_certificate = true
      }
    }

- Step 10: After creating the CloudFront distribution, we need to add the CloudFront URL of the image to index.html, which was cloned onto the EC2 instance. For this we again use the remote-exec provisioner.

Here is the code …

    resource "null_resource" "updating_url" {
      # index.html must already be on the instance (efs_attach), and the
      # distribution and the uploaded image must exist
      depends_on = [
        aws_cloudfront_distribution.cloud_front,
        null_resource.efs_attach,
        null_resource.cloning
      ]

      connection {
        type        = "ssh"
        user        = "ec2-user"
        private_key = tls_private_key.my_instancekey.private_key_pem
        host        = aws_instance.os.public_ip
      }

      # Append an <img> tag that points at the image served through CloudFront
      provisioner "remote-exec" {
        inline = [
          "sudo su << EOF",
          "echo \"<img src='https://${aws_cloudfront_distribution.cloud_front.domain_name}/${aws_s3_bucket_object.myimage.key}' width='600' height='500'>\" >> /var/www/html/index.html",
          "EOF"
        ]
      }
    }

That's it. The coding part is done.

Now save the code and initialize Terraform with the command: "terraform init"

Then run the command: "terraform apply --auto-approve"

Outputs :

→EC2 Instance …

→ Key pair …

→ Security Group …

→ EFS storage …

→ S3 Bucket …

→ CloudFront …

Now copy the public IP of your instance (in my case it is 13.233.100.160) and paste it into the browser.
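Instead of looking up the public IP in the AWS console, Terraform can print it at the end of the apply. A small sketch using output blocks follows; the output names are hypothetical, while the attributes refer to the resources defined earlier in this post.

```hcl
# Printed after "terraform apply"; also available later via "terraform output"
output "instance_public_ip" {
  description = "Public IP of the web server; open it in the browser"
  value       = aws_instance.os.public_ip
}

output "cloudfront_domain_name" {
  description = "Domain name of the CloudFront distribution serving the image"
  value       = aws_cloudfront_distribution.cloud_front.domain_name
}
```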

Successfully Done with task ….

Here is the GitHub Link for code …

Thanks for reading ….

If you have any queries related to this task, connect with me on LinkedIn.

…. Signing Off ….