Face Recognition Using the Concept of Transfer Learning

Transfer learning is not a new concept. Ever since we humans began training machines to learn, classify and predict data, we have looked for ways to retain what the machine has already learnt.

Learning is not an easy process, neither for humans nor for machines. It is heavy-duty, resource-consuming and time-consuming. That made it important to devise a method that keeps a model from forgetting what it has learned from one dataset while still letting it learn from new and different datasets.

Transfer learning is simply the process of taking a model that has already been trained on one dataset and reusing it to train and predict on a new dataset.

“A pre-trained model is a saved network that was previously trained on a large dataset, typically on a large-scale image-classification task.”

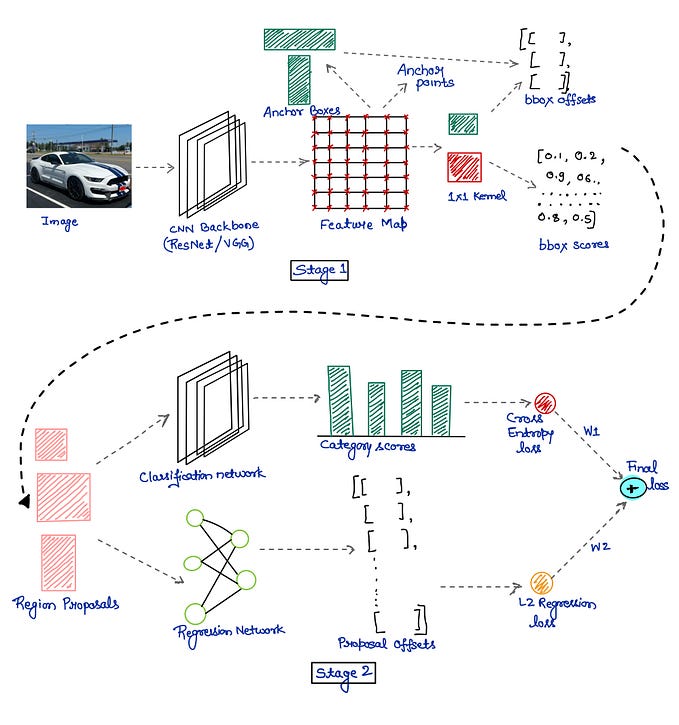

Task → Create a project that uses transfer learning to solve problems such as face recognition or image classification, building on existing deep learning models such as VGG16, VGG19, ResNet, MobileNet, etc.

MobileNet:

We shall be using MobileNet because its architecture is lightweight. It uses depthwise separable convolutions, which basically means it applies a single filter to each input channel on its own (for an RGB image, each colour channel) and then mixes the channels in a separate step, rather than filtering and combining all of them at once. Or, as the authors of the paper explain clearly: “For MobileNets the depthwise convolution applies a single filter to each input channel. The pointwise convolution then applies a 1×1 convolution to combine the outputs [of] the depthwise convolution. A standard convolution both filters and combines inputs into a new set of outputs in one step. The depthwise separable convolution splits this into two layers, a separate layer for filtering and a separate layer for combining. This factorization has the effect of drastically reducing computation and model size.”
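To get a feel for how much that factorization saves, here is a small back-of-the-envelope sketch that counts the weights in one standard convolution versus one depthwise separable convolution. The layer sizes (3×3 kernel, 32 input channels, 64 output channels) are made-up illustrative numbers, and biases are ignored.

```python
def standard_conv_params(kernel, in_ch, out_ch):
    """Weights in a standard convolution: every filter spans all input channels."""
    return kernel * kernel * in_ch * out_ch


def separable_conv_params(kernel, in_ch, out_ch):
    """Weights in a depthwise separable convolution."""
    depthwise = kernel * kernel * in_ch  # one kernel x kernel filter per input channel
    pointwise = in_ch * out_ch           # 1x1 convolution that combines the channels
    return depthwise + pointwise


# Illustrative layer sizes (assumptions, not taken from the article).
kernel, in_ch, out_ch = 3, 32, 64
std = standard_conv_params(kernel, in_ch, out_ch)
sep = separable_conv_params(kernel, in_ch, out_ch)
print(std, sep, round(sep / std, 3))  # prints: 18432 2336 0.127
```

The ratio works out to 1/out_ch + 1/kernel², so with 3×3 kernels the separable version needs roughly eight to nine times fewer weights.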

I used the MobileNet deep learning model in this task to recognize faces.

I collected the dataset from my friends. Let us move on to the hands-on part.

Step 1: Import the pre-trained MobileNet model (its weights are downloaded from the internet) and freeze the layers that are already trained.

A for loop then prints all the layers of the pre-trained model.
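A minimal sketch of what this step can look like in Keras. The helper names `build_frozen_base` and `print_layers`, the 224×224 input size and the choice of ImageNet weights are my assumptions for illustration, not necessarily what the original code used.

```python
from tensorflow.keras.applications import MobileNet


def build_frozen_base(weights="imagenet", input_shape=(224, 224, 3)):
    """Load MobileNet without its ImageNet classifier and freeze every layer."""
    # include_top=False drops the original 1000-class head so we can add our own.
    base = MobileNet(weights=weights, include_top=False, input_shape=input_shape)
    for layer in base.layers:
        layer.trainable = False  # keep the pre-trained weights fixed during training
    return base


def print_layers(model):
    """Print index, layer type and trainable flag for every layer."""
    for i, layer in enumerate(model.layers):
        print(i, layer.__class__.__name__, layer.trainable)
```

Calling `base = build_frozen_base()` downloads the ImageNet weights on first use, and `print_layers(base)` should then report `False` in the last column for every layer.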

Step 2: Next, add the new layers we need on top of the frozen ones. For this I used a function … The last layer uses the softmax activation function, because telling different faces apart is a classification problem.

Step 3: Import the required libraries from TensorFlow and Keras, and set the number of classes, that is, how many faces you are going to recognize.

Here, the FC_Head line attaches our new layers to the pre-trained model by calling the function nk(). Finally, print the model.
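Steps 2 and 3 together might look roughly like the sketch below. `add_head` is a hypothetical stand-in for the nk() function mentioned above, and the exact layers in it (global average pooling, one 512-unit dense layer) are assumptions, since the original layer list is not shown. Only the final softmax layer sized to the number of faces comes from the text.

```python
from tensorflow.keras.applications import MobileNet
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.models import Model


def add_head(base_model, num_classes, hidden_units=512):
    """Hypothetical stand-in for nk(): stack new trainable layers on the base output."""
    x = base_model.output
    x = GlobalAveragePooling2D()(x)                # collapse each feature map to one value
    x = Dense(hidden_units, activation="relu")(x)  # assumed hidden layer size
    # Softmax gives one probability per face (class).
    return Dense(num_classes, activation="softmax")(x)


def build_face_classifier(num_classes, weights="imagenet", input_shape=(224, 224, 3)):
    """Frozen MobileNet base plus a new classification head."""
    base = MobileNet(weights=weights, include_top=False, input_shape=input_shape)
    for layer in base.layers:
        layer.trainable = False
    fc_head = add_head(base, num_classes)
    return Model(inputs=base.input, outputs=fc_head)
```

For example, `model = build_face_classifier(num_classes=3)` followed by `model.summary()` prints the combined network. Only the two new dense layers should show up as trainable.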

Step 4: Now that everything is ready, we need to train the new model (pre-trained layers + our layers). For this we need an image dataset generator. The train dataset is used for training and the test dataset for validation.
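One possible shape for that data pipeline, assuming the images sit in one sub-folder per person inside separate train and test directories, and assuming Keras' `ImageDataGenerator` is the generator meant here. The helper name `make_generators`, the horizontal-flip augmentation and the batch size are illustrative choices of mine.

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator


def make_generators(train_dir, test_dir, img_size=(224, 224), batch_size=16):
    """Build training and validation generators from per-person image folders."""
    # Light augmentation on the training images only (an assumption).
    train_datagen = ImageDataGenerator(rescale=1.0 / 255, horizontal_flip=True)
    val_datagen = ImageDataGenerator(rescale=1.0 / 255)

    train_gen = train_datagen.flow_from_directory(
        train_dir,
        target_size=img_size,
        batch_size=batch_size,
        class_mode="categorical",  # one-hot labels, matching a softmax output
    )
    val_gen = val_datagen.flow_from_directory(
        test_dir,
        target_size=img_size,
        batch_size=batch_size,
        class_mode="categorical",
        shuffle=False,  # keep validation order stable
    )
    return train_gen, val_gen
```

Each sub-folder name becomes a class label, and `train_gen.class_indices` shows which folder was mapped to which index.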

Step 5: Now fit the model.
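Fitting could be done along these lines. The helper name `train`, the Adam optimizer, the learning rate and the number of epochs are all assumptions. Categorical cross-entropy is the usual loss for a softmax classifier with one-hot labels.

```python
from tensorflow.keras.optimizers import Adam


def train(model, train_data, val_data, epochs=5, learning_rate=1e-3):
    """Compile the model and fit it on the training data, validating each epoch."""
    model.compile(
        optimizer=Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    # train_data / val_data can be Keras generators or tf.data datasets.
    return model.fit(train_data, validation_data=val_data, epochs=epochs)
```

The returned history object keeps the per-epoch loss and accuracy in `history.history`, which is handy for spotting overfitting on a small face dataset.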

Step 6: After fitting the model, we need to check whether it has been trained properly. First we need to save the model.

command → model.save("task4.net")
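A small sketch of saving the model and reading it back. One caveat: Keras 3 only accepts file names ending in `.keras` or `.h5`, so the sketch defaults to a hypothetical `task4.keras` rather than the name used above. The helper name `save_and_reload` is also mine.

```python
from tensorflow.keras.models import load_model


def save_and_reload(model, path="task4.keras"):
    """Save the trained model to disk and load it back as a fresh object."""
    model.save(path)         # architecture and weights in a single file
    return load_model(path)  # the reloaded copy should predict identically
```

Comparing the predictions of the original and the reloaded model on the same batch is a quick sanity check that nothing was lost on the way to disk.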

Step 7: Load the model and predict faces by picking random images from the test dataset. Here is the code for predicting.
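Since the original prediction code is not reproduced here, the following is only a sketch of how it could work. The helper names `pick_random_image` and `predict_face` are hypothetical, and it assumes the images were rescaled to the 0 to 1 range during training, so the same scaling is applied here.

```python
import os
import random

import numpy as np
from tensorflow.keras.utils import img_to_array, load_img


def pick_random_image(test_dir, extensions=(".jpg", ".jpeg", ".png")):
    """Return the path of a random image found anywhere under test_dir."""
    candidates = [
        os.path.join(folder, name)
        for folder, _, files in os.walk(test_dir)
        for name in files
        if name.lower().endswith(extensions)
    ]
    return random.choice(candidates)


def predict_face(model, image_path, class_names, img_size=(224, 224)):
    """Return (predicted name, probability) for a single image."""
    img = load_img(image_path, target_size=img_size)
    x = img_to_array(img) / 255.0  # same rescaling as assumed for training
    probs = model.predict(x[np.newaxis, ...], verbose=0)[0]  # add a batch dimension
    best = int(np.argmax(probs))
    return class_names[best], float(probs[best])
```

`class_names` has to be in the same order as the class indices used during training. For a `flow_from_directory` generator that mapping is available in its `class_indices` attribute.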

Output: